Developer tools that are worth their while: KEDA and Boundary in action

Running cloud-native platforms efficiently while keeping them secure and accessible requires thoughtful tooling. In this post, we'll explore two open source tools, KEDA and Boundary, that we use at MarkovML and Kapstan, and how they can level up your platform and remove friction for engineers.

Introduction to MarkovML

MarkovML is a platform that allows users to register datasets and run analytics like clustering algorithms on them. It provides capabilities to find similar data points between registered datasets and external data.

Problem Statement

At MarkovML, when a user runs a clustering analysis, we need to store the extracted vector embeddings from the analysis into our vector database for recommendations. Rather than performing this insert as part of the main ECS analytics job, we would like to offload it asynchronously using SQS.

We then need to set up a separate Kubernetes job that processes these events, and ideally, the Kubernetes job can scale up and down depending on queue length.

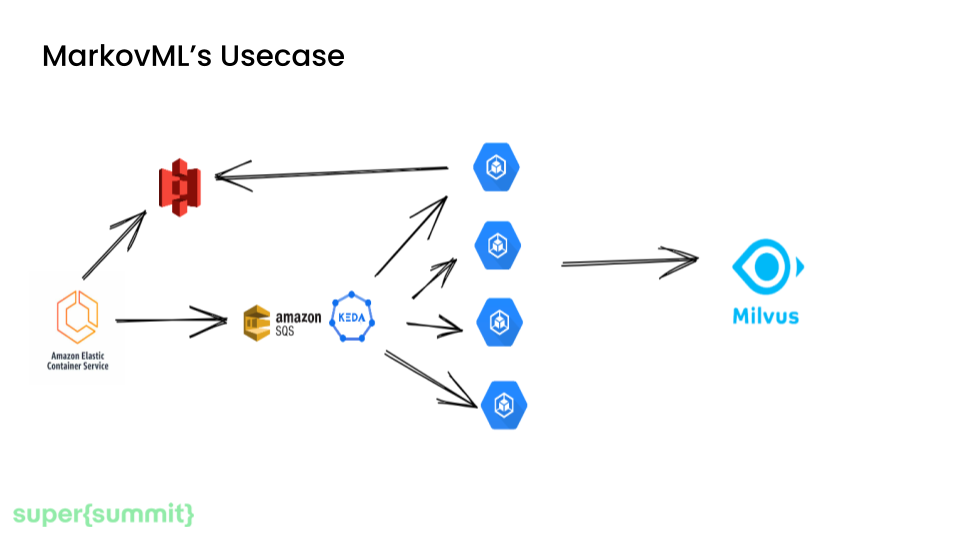

The way we ended up architecting it is to have the main analytics job save the output vectors to S3, then send an SQS message that triggers a separate Kubernetes job managed by KEDA. This job consumes the vectors from S3 and indexes them in our Milvus database.
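As a rough illustration of this flow, here is a minimal sketch in which the SQS message carries only a pointer to the vectors in S3 rather than the vectors themselves. The `EmbeddingEvent` schema, the function names, and the injected `load_vectors` / `index_vectors` callables are all hypothetical stand-ins (for the S3 download and the Milvus insert), not MarkovML's actual code.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class EmbeddingEvent:
    """Pointer to a batch of embeddings already written to S3 (hypothetical schema)."""
    bucket: str
    key: str
    dataset_id: str


def to_message_body(event: EmbeddingEvent) -> str:
    """Producer side: serialize the event for the SQS message body."""
    return json.dumps(asdict(event))


def from_message_body(body: str) -> EmbeddingEvent:
    """Worker side: parse an SQS message body back into an event."""
    return EmbeddingEvent(**json.loads(body))


def handle_message(body: str, load_vectors, index_vectors) -> int:
    """Fetch the vectors the message points at, then index them.

    load_vectors(bucket, key) and index_vectors(dataset_id, vectors) are
    injected callables standing in for the S3 read and the Milvus insert,
    so the flow can be exercised without any cloud services.
    """
    event = from_message_body(body)
    vectors = load_vectors(event.bucket, event.key)
    index_vectors(event.dataset_id, vectors)
    return len(vectors)
```

Keeping the payload in S3 and sending only a reference keeps the queue messages small, and the worker stays easy to test because its storage dependencies are passed in.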

Introduction to KEDA

So what is KEDA and why do we need it?

KEDA stands for Kubernetes Event-driven Autoscaling. It allows Kubernetes clusters to automatically scale workloads up and down based on events from external sources such as Kafka, AWS SQS, Redis, and MySQL.

So for example: let's say you have a stream processing application that analyzes user activity events from Kafka and outputs aggregated data to a database. The normal workflow is:

- User activity events stream into a Kafka topic.

- Your Kubernetes application consumes these events, analyzes them, and outputs aggregated data.

- The aggregated data is inserted into a database for reporting.

The challenge is that the stream volume varies a lot throughout the day based on user traffic.

With KEDA, you can configure autoscaling on your stream processing app to scale up and down based on the number of messages in the Kafka consumer group (lag).

KEDA integrates natively with Kubernetes and works alongside the standard Horizontal Pod Autoscaler (HPA). It can scale up the processing pods during peak traffic to handle the increased load, and scale down when traffic is low to save resources - even down to 0 pods.

By leveraging KEDA for Kafka autoscaling, you can build an efficient and reactive stream processing pipeline that minimizes resource usage and costs. The scaling aligns closely with the real workload rather than just CPU/memory.
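To make "lag" concrete, here is a small sketch of how consumer-group lag is commonly computed: for each partition, the latest offset minus the group's committed offset, summed across partitions. The function name and the plain-dict inputs are assumptions for illustration; this is not KEDA's code.

```python
def consumer_group_lag(
    latest_offsets: dict[int, int],
    committed_offsets: dict[int, int],
) -> int:
    """Total lag across partitions: sum of (latest offset - committed offset)."""
    total = 0
    for partition, latest in latest_offsets.items():
        # Simplification: a partition with no committed offset is counted
        # from 0. Real consumers follow their offset reset policy instead.
        committed = committed_offsets.get(partition, 0)
        total += max(latest - committed, 0)
    return total
```

For example, with latest offsets `{0: 100, 1: 250}` and committed offsets `{0: 90, 1: 200}`, the lag is 10 + 50 = 60 messages.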

KEDA Operators

In KEDA, Scalers and Operators work together to enable autoscaling based on external data sources:

- Scalers - These plugins connect KEDA to external data sources like Kafka, SQS, MySQL etc. and expose metrics like queue length, lag, row count etc. There are many built-in scalers for common sources. You can also create custom scalers.

- Operators - These components work along with the Horizontal Pod Autoscaler (HPA). They consume the metrics exposed by Scalers and calculate if the replicas need to be scaled up or down based on the thresholds defined in the ScaledObject resource.

For example, the AWS SQS scaler connects to an SQS queue, gets the approximate queue length, and exposes it as a metric to KEDA. Configuration is done through YAML-based ScaledObject resources that define how to scale.

The KEDA operator consumes this metric. If the queue length is above the threshold defined in the ScaledObject, it will signal HPA to scale up the target deployment to handle the queue depth.

For example, to scale based on SQS queue length, you would write this YAML:

    apiVersion: keda.sh/v1alpha1
    kind: ScaledObject
    metadata:
      name: sqs-processor
    spec:
      scaleTargetRef:
        name: sqs-worker        # Deployment to scale
      pollingInterval: 30       # seconds between queue checks
      cooldownPeriod: 300       # seconds to wait before scaling to zero
      minReplicaCount: 0
      maxReplicaCount: 20
      triggers:
        - type: aws-sqs-queue
          metadata:
            queueURL: https://sqs.us-east-1.amazonaws.com/account/queue
            queueLength: "20"   # target messages per replica
            awsRegion: "us-east-1"

This scales the sqs-worker Deployment between 0 and 20 pods based on queue length, targeting roughly 20 messages per pod.
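The replica math behind a configuration like this can be approximated as follows. This is a simplified sketch of the average-value calculation the HPA performs on an external metric, with a hypothetical function name; it ignores HPA tolerance, stabilization windows, and the cooldown period, so real scaling decisions will lag behind these numbers.

```python
import math


def desired_replicas(
    queue_length: int,
    target_per_replica: int,
    min_replicas: int = 0,
    max_replicas: int = 20,
) -> int:
    """Approximate replica count for a given queue depth.

    target_per_replica plays the role of queueLength in the ScaledObject:
    the number of messages each pod is expected to handle.
    """
    if target_per_replica <= 0:
        raise ValueError("target_per_replica must be positive")
    if queue_length <= 0:
        # Empty queue: fall back to the configured minimum (possibly zero).
        return min_replicas
    wanted = math.ceil(queue_length / target_per_replica)
    # Clamp to the configured bounds.
    return max(min_replicas, min(wanted, max_replicas))
```

With a target of 20 messages per pod, 45 queued messages would call for 3 pods, while 1,000 queued messages would be capped at the maximum of 20.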

The scaler and operator separation allows KEDA to plug into many types of data sources and leverage HPA for the actual scaling logic.

—

Introduction to Kapstan

Kapstan is a no-code tool for infrastructure provisioning - it allows developers to do things like converting their existing AWS resources to Terraform templates, all without having to write any code.

At Kapstan, we use Boundary internally to access our own infrastructure and internal tools. Our product can also set up Boundary for our clients so that their infrastructure is accessible in a simple and secure way.

Introduction to Boundary

Critical services often run on private networks for security. But developers also need access to debug issues in production. This causes tension between security and productivity.

Typical solutions like VPNs and bastion hosts have downsides. VPNs grant broad access to private networks and resources, which means you have to be careful about who gets access. There is also the issue of onboarding and offboarding: managing keys can become a huge time sink as a company grows and employees join and leave.

Enter HashiCorp’s Boundary. Boundary is a tool that helps securely manage access to infrastructure and resources that run privately, like servers in a VPC with no public internet access. It solves common access issues faced by developers and ops teams in a simple yet secure way.

Rather than handing out VPN credentials or SSH keys that provide broad network access, Boundary integrates with your organization's identity provider, like Okta or Azure Active Directory. It uses your existing user identities and roles to grant permissions to specific resources, like a private database.

Developers can log into Boundary with their own credentials and access allowed resources. This permissioning is set up in a role-based fashion - you can grant a user read-only access or restrict queries, for example.

Each session generates a unique certificate and cryptographic key rather than relying on static, long-lived keys. After a session completes, the certificate and cryptographic key are immediately revoked.
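The idea of per-session credentials can be shown with a toy model. This is not Boundary's implementation (which issues certificates and keys); `SessionBroker` and its methods are hypothetical, and a random token stands in for the session credential. The point is only the lifecycle: a fresh credential per session, a time limit, and revocation as soon as the session ends.

```python
import secrets
import time
from contextlib import contextmanager


class SessionBroker:
    """Toy stand-in for a component that issues per-session credentials."""

    def __init__(self, ttl_seconds: float = 3600.0):
        self._ttl = ttl_seconds
        self._active: dict[str, float] = {}  # token -> expiry (monotonic clock)

    def is_valid(self, token: str) -> bool:
        """A token is valid only while its session is open and unexpired."""
        expiry = self._active.get(token)
        return expiry is not None and time.monotonic() < expiry

    @contextmanager
    def session(self):
        """Issue a unique credential for one session and revoke it afterwards."""
        token = secrets.token_urlsafe(32)
        self._active[token] = time.monotonic() + self._ttl
        try:
            yield token
        finally:
            # Revoke when the session ends, even if it ended with an error.
            self._active.pop(token, None)
```

Because nothing long-lived is handed out, there is no static key to rotate or to forget to revoke when someone leaves the team.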

Boundary architecture

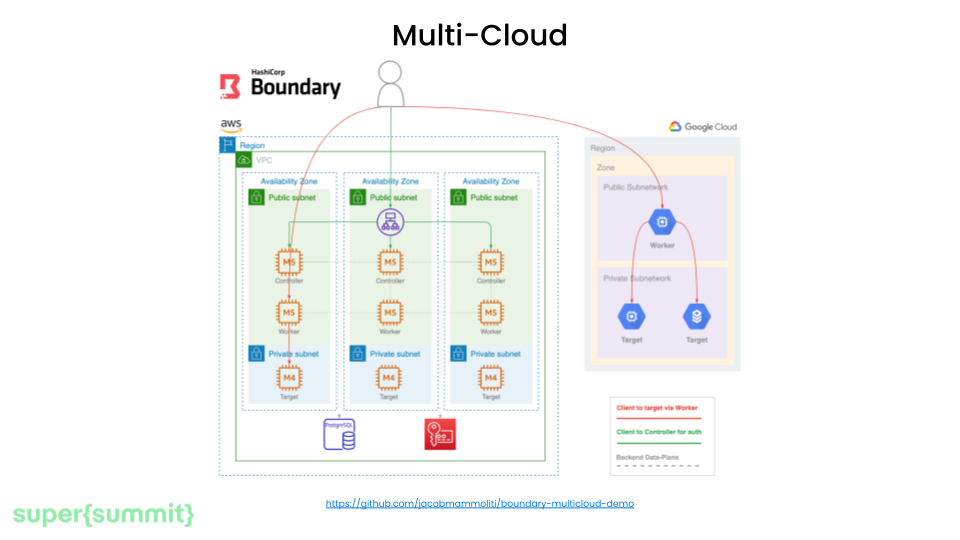

Boundary consists of two key components - the worker and the controller. The worker proxies connections to target resources. The controller handles authentication, configurations, and is the main user-facing interface.

The worker and controller have a decoupled architecture. This means that the controller backend can run entirely on AWS, for example, while workers connect from GCP, Azure, on-prem data centers, etc., which simplifies managing access across multi-cloud environments. You don't need separate Boundary deployments everywhere.

Finally, Boundary also runs a local agent so you can use your existing tools like pgAdmin without reconfiguration.

Should you use Boundary?

Boundary is a good fit for organizations that need to manage secure access across multi-cloud environments. Its cloud-agnostic model allows consistent policies across on-prem, AWS, Azure, GCP, and other infrastructures.

You might also consider using Boundary if you need robust session auditing and logging capabilities to monitor user activity, or if your team is extremely security conscious and wants to operate on a “least-privilege” security model.

Boundary is not expensive, but you do need to run an EC2 instance or a Kubernetes pod, plus a database, so you should only use it if you can justify the operational cost.

Conclusion

By using tools like KEDA and Boundary together, you can ensure that your platform is running both effectively and securely, enabling your developers to focus on building rather than optimizing.

Watch Chris Fellowes' entire session from super{summit} 2023 below (alongside Akshaye Srivastava) and learn more about super{summit} at superset.com/summit!
